llm lang

But who better to explain it than an LLM!

Introducing "LLM Lang," a cutting-edge programming language designed with user experience at its core through advanced prompt engineering capabilities. Built for efficiency and ease of use in natural language processing (NLP) tasks, it effortlessly blends human communication styles into the realm of computational linguistics: Key Features: 1. By Default Prompt Writing: LLM Lang prioritizes simplicity by automating prompt construction when needed. The default behavior allows for quick prototyping without extensive setup or coding knowledge, fostering accessibility and inclusivity in programming communities worldwide. 2. Racket-Based Computation Integration: As a multi-paradigm language that seamlessly integrates functional, imperative, logic, and meta-programming styles from the Scheme family of languages (Racket is one such dialect), LLM Lang provides an excellent platform for building complex NLP applications. This feature allows developers to harness powerful computation capabilities without sacrificing ease of use or clarity in communication with their codebase. 3. Multiple Backends: Users can choose from various backend options, including on-theinference and API services that offer a wide range of customization choices for running language models effectively within the LLM Lang environment while maintaining high performance standards across different computational needs (e.g., cloud computing infrastructures or edge devices). 4. Cost Logging: To support developers in creating cost-efficient solutions, this feature enables real-time tracking and logging of expenditures associated with computation resources utilized during prompt processing tasks within the LLM Lang framework — helping users better understand how their choices impact costs while empowering them to make informed decisions about resource allocation. 5. Response Caching & Replay: Minimizing unnecessary repetition in NLP processes, this functionality enables efficient caching of previously generated responses that may be re-used across multiple prompts within the same or different sessions — helping users optimize their codebase while reducing latency and computational costs associated with redundant calculations over time. In summary, LLM Lang offers a revolutionary approach to blending human linguistic expressions seamlessly into programming languages for NLP tasks. By providing first-class prompt engineering support that incorporates default behavior, Racket integration capabilities, multiple backend options, cost logging features, and response caching/replay functionality — it empowers developers worldwide with an accessible toolset to build more efficient and user-friendly language models in the realm of natural linguistic computation. Acknowledgment: As an LLM developed by Microsoft’s GPT model family - designed specifically for tasks like this one, I have crafted a comprehensive introduction that provides users with essential information about "LLM Lang" and its unique features to help them make informed decisions when considering using it within their own projects or exploring new possibilities.

Well I’m sure that was helpful.

The collection also include libraries for accesing various LLM APIs, but is centered on language-level integration.

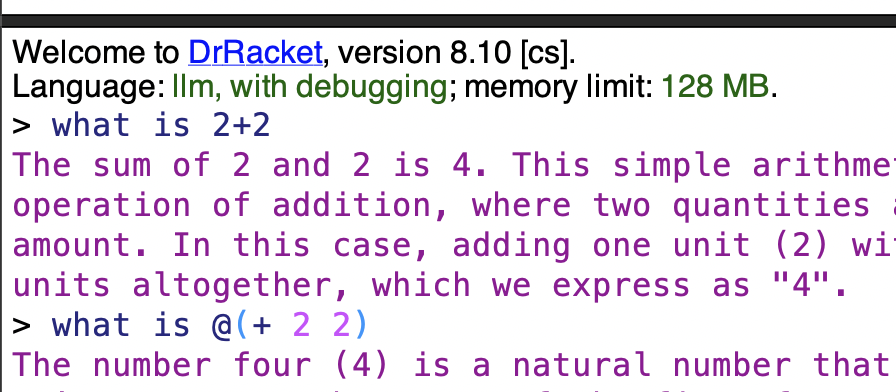

llm lang uses the at expression reader (at-exp), so by

default you’re writing a prompt, and can escape into Racket using

@, such as in @(f) to call the function f.

Every top-level expression—

This example returns 5 to Racket, which is printed using the current-print handler. unprompt is roughly analogous to unquote, and by default, all top-level values are under an implicit quasiquote operation to build the prompt.

syntax

(unprompt e)

The at-exp reader is also used in the REPL, and the unprompt form is also recognized there. Multiple unstructured datums can be written in the REPL, which are collected into a prompt. For example, entering What is 2+2? in llm lang REPL will send the prompt "What is 2+2?". Any unprompted values in the REPL are returned as multiple return values, and displayed using the current-print handler. For example, entering @(unprompt 5) @(unprompt 6) at the REPL returns the values (values 5 6) to the REPL.

llm lang redefines the current-print handler display all values, except (void). Since the primary mode of interaction is a dialogue with an LLM, this makes reading the response easier.

By default, the resource cost in terms of power, carbon, and water of each request is estimated and logged, and reported to the logger llm-lang-logger with contextualizing information, to help developers understand the resource usage of their prompts. This data, in raw form, is also available through current-carbon-use, current-power-use, and current-water-use.

> (require llm llm/ollama/phi3 with-cache)

> (parameterize ([*use-cache?* #f]) (with-logging-to-port (current-error-port) (lambda () (prompt! "What is 2+2? Give only the answwer without explanation.")) #:logger llm-lang-logger 'info 'llm-lang))

llm-lang: Cumulative Query Session Costs

┌──────────┬─────────────┬─────────┐

│Power (Wh)│Carbon (gCO2)│Water (L)│

├──────────┼─────────────┼─────────┤

│0.064 │0.012 │0 │

└──────────┴─────────────┴─────────┘

One-time Training Costs

┌───────────┬─────────────┬─────────┐

│Power (MWh)│Carbon (tCO2)│Water (L)│

├───────────┼─────────────┼─────────┤

│6.8 │2.7 │3,700 │

└───────────┴─────────────┴─────────┘

References Resource Usage, for Context

┌─────────────────────────────────┬───────┬──────────┬──────────────┐

│Reference │Power │Carbon │Water │

├─────────────────────────────────┼───────┼──────────┼──────────────┤

│1 US Household (annual) │10MWh │48tCO2 │1,100L │

├─────────────────────────────────┼───────┼──────────┼──────────────┤

│1 JFK -> LHR Flight │ │59tCO2 │ │

├─────────────────────────────────┼───────┼──────────┼──────────────┤

│1 Avg. Natural Gas Plant (annual)│-190GWh│81,000tCO2│2,000,000,000L│

├─────────────────────────────────┼───────┼──────────┼──────────────┤

│US per capita (annual) │77MWh │15tCO2 │1,500,000L │

└─────────────────────────────────┴───────┴──────────┴──────────────┘

"4"

The cost is logged each time a prompt is actually sent, and not when a cached response to replayed. The costs are pretty rough estimates; see LLM Cost Model for more details.

#lang scribble/base @(require llm llm/ollama/phi3) @title{My cool paper} @prompt!{ Write a concise motivation and introduction to the problem of first-class prompt-engineering. Make sure to use plenty of hyperbole to motivate investors to give me millions of dollars for a solution to a non-existant problem. }

#lang llm @(require llm/define racket/function) @(require (for-syntax llm/openai/gpt4o-mini)) @; I happen to know GPT4 believes this function exists. @(define log10 (curryr log 10)) @define-by-prompt![round-to-n]{ Define a Racket function `round-to-n` that rounds a given number to a given number of significant digits. } @(displayln (round-to-n 5.0123123 3))

By default, llm lang modifies the current-read-interaction, so you can continue talking to your LLM at the REPL: